Apple's Foundation Models framework, announced alongside iOS 26 at WWDC 2025, changes how developers build smarter apps. Apps on Apple Intelligence-capable devices can now call a fast, privacy-focused on-device language model for tasks such as summarization, extraction, and text generation, all without sending user data off the device.

The framework opens a direct path to features like summarization, structured text extraction, and tool calling. As of the initial iOS 26 SDK it exposes the on-device model only. Apple's larger server models on Private Cloud Compute power system features but are not callable through this API, so inference stays on the device and privacy stays at the core.

In this tutorial you'll see how to use on-device AI in Swift, walk through the integration process step by step, and look at real examples of Foundation Models in action. With the tools released at WWDC and the rapid evolution of Apple Intelligence, now is a good time to explore what they can do for your iOS projects.

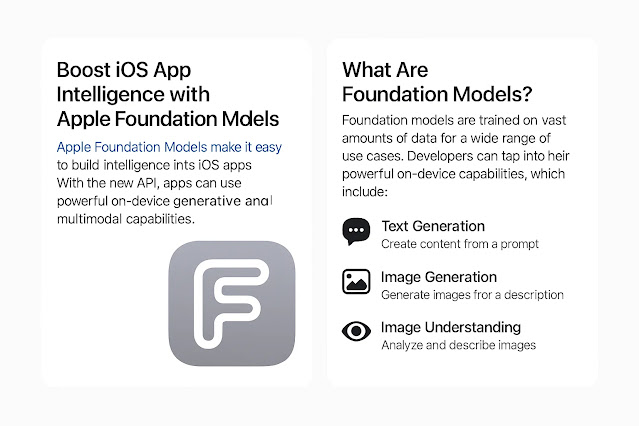

What Are Apple Foundation Models?

Apple Foundation Models are a family of generative AI models that power intelligent features across iOS, iPadOS, macOS, and visionOS. They drive capabilities like natural language understanding and tailored user experiences, all with Apple's strict focus on privacy, efficiency, and reliability. This tutorial shows how these models can transform everyday tasks within your apps using on-device AI in Swift.

Let's break down what makes the Foundation Models framework distinctive and why developers are so excited about the on-device LLM in iOS 26.

Core Architecture and Capabilities

Apple’s approach blends powerful model design with hardware-optimized performance:

- On-Device Large Language Model (LLM): The primary Apple Foundation Model running on iPhone, iPad, and Mac has around 3 billion parameters. Apple tunes it for tasks like summarization, smart text extraction, and notification handling. It is designed for low latency: Apple's published 2024 benchmarks on iPhone 15 Pro cite a time-to-first-token latency of about 0.6 ms per prompt token and a generation rate of about 30 tokens per second.

- Mixture-of-Experts for Scalability: For more complex tasks, Apple's server-based models use a mixture-of-experts (MoE) architecture, similar in concept to advanced cloud-only LLMs. These models run on Private Cloud Compute and power Apple's own system features. The Foundation Models framework does not expose them to third-party apps in the initial iOS 26 release.

- Multimodal Abilities: Apple Foundation Models aren't just about text. Apple describes the models as trained on images as well as language, which supports system features such as visual search and photo understanding. The framework's developer-facing API, however, takes text prompts at launch.

- Efficient by Design: The on-device model uses aggressive quantization (as low as 2 bits per weight), which shrinks its memory footprint dramatically with little loss in quality. Apple's optimizations mean even complex AI can run entirely on your device, using Apple silicon.

- Built for Privacy: Apple designs these models so most data processing happens on your device. Personal information stays private, with no round trips to a third-party server, and you control what, if anything, leaves the device.
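To make the memory-footprint point concrete, here is a back-of-the-envelope calculation. It assumes exactly 3 billion weights and ignores everything the real model stores at other precisions (embeddings, adapters, the KV cache), so treat the results as rough orders of magnitude, not Apple's figures. The function name is illustrative.

```swift
import Foundation

/// Approximate weight storage in gigabytes (1 GB = 1e9 bytes) for a model
/// with `parameters` weights stored at `bitsPerWeight` bits each.
func weightFootprintGB(parameters: Double, bitsPerWeight: Double) -> Double {
    let bytes = parameters * bitsPerWeight / 8.0
    return bytes / 1_000_000_000.0
}

let parameters = 3_000_000_000.0   // "around 3 billion" from the text above
let sixteenBit = weightFootprintGB(parameters: parameters, bitsPerWeight: 16)
let twoBit = weightFootprintGB(parameters: parameters, bitsPerWeight: 2)

print("16-bit weights: \(sixteenBit) GB")   // 6.0 GB
print("2-bit weights: \(twoBit) GB")        // 0.75 GB
```

Under these assumptions, 2-bit weights need one eighth of the storage that 16-bit weights would, which is the difference between a model that fits comfortably beside your app and one that does not.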

How Apple Foundation Models Differ from Cloud-Only Competitors

Apple’s AI models stand apart from typical AI solutions in several ways:

- Runs on Your Device: Unlike cloud-first AI platforms, Apple’s Foundation Models prioritize local execution. This means faster responses, less reliance on constant internet connectivity, and a much stronger privacy story—key trust factors for any modern app.

- Optimized for Apple Silicon: Apple’s chips are built from the ground up for AI workloads. The Foundation Models are heavily tuned to take advantage of this hardware, ensuring efficient use of memory and processing power. You get advanced AI features with minimal battery impact.

- Personalized and Adaptable: Thanks to modular adapters, you can specialize the model for specific app features. Apple provides an adapter training toolkit, so you can train an adapter for your use case and ship it with your app instead of waiting for updates to someone else's server model.

- AI Safety and Reliability: With robust, built-in safety guardrails and strong content filtering, Apple’s models strive to deliver trustworthy results. The training data is curated with privacy and quality in mind, relying on licensed sources and strict exclusion of personal or sensitive details.

Key Features Powering App Intelligence

To highlight what's possible with the Foundation Models framework, here are some core features available for your apps:

- Fast summarization and structured data extraction.

- Text understanding and generation in real time. (Image input is not part of the framework's public API in the initial iOS 26 release.)

- Reliable, context-aware tool invocation for automations.

- Multi-turn conversations within a session's context window.

- On-the-fly adapter loading for personalized experiences without retraining the full model.

These advances mean app creators can deliver AI-powered features that feel seamless, private, and consistently responsive. When you build on the Foundation Models framework, you tap into smart technology with user trust and performance at its core.

Apple Foundation Models are the building blocks for the next generation of intelligent iOS apps—ready for you to explore and make your own, right from your Swift codebase.

Getting Started: Integrating the Foundation Models Framework in iOS 26

Adding the Apple Foundation Models framework to your iOS 26 project is a big step toward bringing private, efficient, and powerful AI features right into your app. Whether you're aiming to build smart chatbots, summarize content, extract structured data, or automate tools, the setup is approachable. In this section, you'll walk through the essentials of integrating the framework, understand how the on-device model relates to Apple's server models, and see how Swift macros make working with AI responses safer and faster.

Accessing On-Device LLMs and Cloud-Based Models

Apple's Foundation Models come in two tiers: an on-device model for maximum privacy and speed, and larger server models on Apple's secure cloud. As of the initial iOS 26 SDK, the Foundation Models framework gives your app direct access to the on-device model only, so it helps to know what each tier does.

On-Device LLMs:

- These models work directly on your user's iPhone, iPad, or Mac.

- They're engineered for low latency (Apple cites about 0.6 ms per prompt token before the first output token, and about 30 tokens per second of generation, on iPhone 15 Pro).

- All user data remains local, supporting privacy by design.

- Best for everyday tasks like quick summarization, extracting keywords, or personal automations.

Cloud-Based Models:

- Hosted on Apple's Private Cloud Compute (PCC) servers.

- Larger and capable of deeper reasoning, higher accuracy, or working with much larger contexts (up to 65,000 tokens).

- Communication remains encrypted and privacy-focused, with Apple promising no human review or reuse of queries.

- Used by Apple Intelligence system features for advanced use cases. They are not callable from the Foundation Models framework at the time of writing.

How to Select a Model and Plan Fallbacks:

When you integrate the framework in Swift, every session runs against the system's on-device model:

- Use `SystemLanguageModel.default` (what a new `LanguageModelSession` uses unless you say otherwise) when privacy, speed, or offline support takes priority.

- Check `SystemLanguageModel.default.availability` before showing AI features. The model is unavailable on ineligible devices, when Apple Intelligence is turned off, or while model assets are still downloading.

- Plan your own fallback. If the model is unavailable or a request exceeds the context window, hide the feature, shorten the request, or route it to a server you operate.

Smart Session Example:

    import FoundationModels
    let session = LanguageModelSession()

Keep an eye on device settings: Apple Intelligence must be enabled on a supported device before the model can be used.
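A minimal sketch of gating a feature on model availability follows. It assumes a deployment target of iOS 26 or macOS 26 and the `SystemLanguageModel` and `LanguageModelSession` types from the shipping FoundationModels SDK; `makeSessionIfAvailable` and the instruction text are illustrative names, not part of Apple's API. The `#if canImport` guard simply lets the file compile on platforms without the framework.

```swift
import Foundation
#if canImport(FoundationModels)
import FoundationModels

/// Returns a ready-to-use session, or nil when the on-device model
/// cannot be used on this device right now.
func makeSessionIfAvailable() -> LanguageModelSession? {
    switch SystemLanguageModel.default.availability {
    case .available:
        return LanguageModelSession(instructions: "You are a concise assistant.")
    case .unavailable(let reason):
        // Typical reasons: the device is not eligible, Apple Intelligence is
        // switched off, or the model assets have not finished downloading.
        print("On-device model unavailable: \(reason)")
        return nil
    }
}
#endif
```

Calling this when a screen appears lets you hide or disable AI controls up front instead of failing after the user taps something.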

Quick Tips:

- Test features on real hardware, not just Simulator, to fully measure latency and behavior.

- Clearly let users know if any cloud processing is involved, building trust from day one.

Swift Macros and Guided Generation for Strong-Typed AI Responses

The Foundation Models framework makes working with AI much simpler—and safer—thanks to two key Swift macros: @Generable and @Guide. These tools transform the way your app gets and handles AI output, making responses type-safe and easy to manage.

Using @Generable and @Guide Macros

@Generable:

- Lets you define Swift types (structs, enums) that directly map to the AI output.

- The model fills in your defined properties through constrained decoding, so your code receives a typed Swift value instead of raw text.

- Example (the due date is requested as text because Date is not among the types the macro can generate directly):

      @Generable
      struct ActionItem {
          let title: String
          let dueDate: String
          let isComplete: Bool
      }

@Guide:

- Attaches to individual properties of a @Generable type to describe them or constrain their values (for example a numeric range or an element count).

- Use this macro to refine AI responses for very specific workflows or stricter correctness.

Why does this matter? Freeform text output from traditional LLM APIs is tough to rely on. Mistakes like wrong types, missing fields, or mangled items are common. With these macros, Apple’s framework uses constrained decoding—the AI must fit its answers into your schema before your code runs. That means fewer runtime errors and easier data handling.
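Here is a sketch of guided generation end to end. It assumes a deployment target of iOS 26 or macOS 26 and the shipping FoundationModels API (`respond(to:generating:)`, `@Guide(description:)`, and the `.range` guide). `TaskItem`, `extractTaskItem`, and `parseDueDate` are hypothetical names, and the date is requested as text and parsed afterwards rather than generated as a `Date`.

```swift
import Foundation
#if canImport(FoundationModels)
import FoundationModels

@Generable
struct TaskItem {
    @Guide(description: "Short imperative title for the task")
    var title: String

    @Guide(description: "Due date as YYYY-MM-DD, or an empty string if none is mentioned")
    var dueDate: String

    @Guide(description: "Priority from 1 (low) to 3 (high)", .range(1...3))
    var priority: Int
}

/// Asks the on-device model for one typed task extracted from free text.
func extractTaskItem(from note: String) async throws -> TaskItem {
    let session = LanguageModelSession(
        instructions: "Extract a single task from the user's note."
    )
    let response = try await session.respond(to: note, generating: TaskItem.self)
    return response.content
}
#endif

/// Parses the model's "YYYY-MM-DD" text; returns nil for anything else.
func parseDueDate(_ text: String) -> Date? {
    let formatter = DateFormatter()
    formatter.calendar = Calendar(identifier: .gregorian)
    formatter.locale = Locale(identifier: "en_US_POSIX")
    formatter.timeZone = TimeZone(secondsFromGMT: 0)
    formatter.dateFormat = "yyyy-MM-dd"
    return formatter.date(from: text)
}
```

Keeping the date as text and validating it yourself means a malformed value becomes a `nil` you can handle, not a decoding failure.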

Best Practices for Type-Safe AI Workflows

Building with guided generation and type safety brings structure and trust to your features. Here’s how to get the most from these tools:

- Always annotate your expected output. Define Swift structs or enums that match what your app needs. This way, you control the contract between your prompts and your UI logic.

- Break down complex tasks. For larger workflows, split your types. Map out each stage—classification, extraction, summarization—with clear models for each.

- Use enums for controlled vocabularies. If your AI should return one of several choices (like action types), declare that with a Swift enum and annotate it.

- Take advantage of streaming. The Foundation Models API supports streaming responses as “snapshots.” You can show partial completions or update your UI live while the AI works.

Example: Extracting Event Details from Text

    @Generable
    struct Event {
        let title: String
        let location: String?
        // Requested as text (for example "2025-09-15"); parse it into a Date yourself.
        let date: String?
    }

With this, your AI output is always a filled-in Event struct, not a fuzzy paragraph you need to parse or clean.

- Test and verify output often. Use Xcode 26 Playgrounds to try new prompt schemas and make sure the structure matches your app’s needs.

- Handle errors gracefully. If the AI cannot match your type, the framework returns a clear error you can show or log.
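The streaming and error-handling advice above can be sketched together. This assumes a deployment target of iOS 26 or macOS 26 and the shipping FoundationModels API, where each streamed element is a snapshot whose `content` holds a partially generated value with optional fields. Early betas yielded the partial value directly, so adjust if your SDK differs. `EventDraft` and `streamEventDraft` are illustrative names.

```swift
import Foundation
#if canImport(FoundationModels)
import FoundationModels

@Generable
struct EventDraft {
    @Guide(description: "Short event title")
    var title: String

    @Guide(description: "Venue or address, if the text mentions one")
    var location: String?
}

/// Streams partial results and reports failures instead of crashing.
func streamEventDraft(from text: String) async {
    let session = LanguageModelSession(
        instructions: "Extract event details from the user's text."
    )
    do {
        let stream = session.streamResponse(to: text, generating: EventDraft.self)
        for try await snapshot in stream {
            // Every field stays nil until the model has produced it.
            print("Title so far:", snapshot.content.title ?? "(pending)")
        }
    } catch let error as LanguageModelSession.GenerationError {
        // Covers cases such as a guardrail violation or an exceeded context window.
        print("Generation error:", error)
    } catch {
        print("Unexpected error:", error)
    }
}
#endif
```

In a SwiftUI app you would assign each snapshot to observable state in place of the `print` call, so the view fills in as fields arrive.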

Using the @Generable and @Guide macros, you gain a repeatable, testable, and robust workflow. You'll spend less time cleaning up edge cases and more time building unique app features that truly serve your users.

Mastering these basics is your launchpad for rich, safe AI-powered experiences in iOS 26. With privacy, speed, and clarity baked in, you can confidently push your projects forward with the Foundation Models framework and see real value from integrating on-device AI in Swift.

Powerful Real-World Use Cases with Integration Steps and Performance Tips

With the Foundation Models framework, you can lift your apps from ordinary to extraordinary. Apple Intelligence now brings on-device, low-latency AI right into Swift code, powering new experiences without giving up privacy or speed. Here, you'll see practical examples that show how much ground you can cover, from smart messaging to image captioning and dynamic tool integrations. Let's walk through the most requested use cases, step by step, with actionable guidance for fast, reliable, and trustworthy app intelligence.

Smart Messaging: Summarization and Auto-Reply

Smart messaging is one of the first places users expect to see intuitive AI, and the on-device LLMs in iOS 26 make these features snappy and private. You can roll out instant message summarization and intelligent auto-replies without sending words to external servers.

Integration Steps:

- Choose the On-Device LLM: Create a `LanguageModelSession`, which runs against the on-device model, for responsive results and privacy. Message content stays on the device.

- Stream Outputs: Use streaming inference for partial summaries or replies as the user reads. This is useful in chat UIs where immediate feedback matters.

- Type-Safety with Macros: Define a Swift struct for message summaries or reply intent, annotated with `@Generable`. For example (Sentiment is assumed to be your own @Generable enum):

      @Generable
      struct MessageSummary {
          let summary: String
          let sentiment: Sentiment
      }

- Prompt Design: Use concise, direct prompts. The model works best with clear instructions, e.g., "Summarize the conversation in one or two lines."

- UI Integration: Bind the output to SwiftUI or UIKit views. Use the streaming snapshots API to animate updating summaries or suggestions in real time.

Performance Tips:

- Model Prewarming: Call `prewarm()` on the session before launching a heavy AI-driven screen to reduce first-inference lag.

- Minimize Properties: Limit output fields to only what you need. Every extra field is more tokens for the model to generate.

- Latency Benchmarks: Test on real hardware. Apple's published iPhone 15 Pro figures (about 0.6 ms per prompt token before the first output token, then about 30 tokens per second) are a useful baseline. Profile longer inputs if you handle email or document threads, and keep the session's context window in mind.
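Putting the steps and tips above together, a summarization flow might look like the sketch below. It assumes a deployment target of iOS 26 or macOS 26 and the shipping FoundationModels API. `Sentiment`, `ConversationSummary`, `makeSummarySession`, `summarizeConversation`, and `buildSummaryPrompt` are all hypothetical names chosen for this example.

```swift
import Foundation
#if canImport(FoundationModels)
import FoundationModels

@Generable
enum Sentiment {
    case positive
    case neutral
    case negative
}

@Generable
struct ConversationSummary {
    @Guide(description: "One or two sentence summary of the conversation")
    var summary: String
    var sentiment: Sentiment
}

/// Creates the session early (for example when the chat screen appears)
/// and asks the system to load the model ahead of the first request.
func makeSummarySession() -> LanguageModelSession {
    let session = LanguageModelSession(
        instructions: "Summarize chat conversations in one or two lines."
    )
    session.prewarm()
    return session
}

/// Sends the recent messages to the model and returns a typed summary.
func summarizeConversation(_ messages: [String],
                           using session: LanguageModelSession) async throws -> ConversationSummary {
    let prompt = buildSummaryPrompt(from: messages)
    let response = try await session.respond(to: prompt, generating: ConversationSummary.self)
    return response.content
}
#endif

/// Keeps only the most recent messages so the prompt stays short.
func buildSummaryPrompt(from messages: [String], limit: Int = 20) -> String {
    messages.suffix(limit).joined(separator: "\n")
}
```

Because a session keeps its transcript, creating a fresh session per conversation avoids carrying old messages into the next summary.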

Key Takeaways:

- Instant summarization and reply suggestions now feel native and private.

- Type-safe output means fewer parsing headaches.

- Users get context-aware help without data ever leaving their device.

Image Recognition and Captioning

Apple Intelligence combines text and vision tasks in a privacy-friendly way. Imagine a camera app that describes scenes or organizes photos by context, built from on-device components your users can trust.

Integration Steps:

- Image Input Path: In the initial iOS 26 SDK, the Foundation Models framework accepts text prompts, not `UIImage` or `CGImage` values. A common pattern is to run an on-device vision step first (for example the Vision framework's text recognition or image classification) and pass the resulting labels or text to the model.

- Define Output Schema: Annotate structures with `@Generable` for captions, tags, or detected objects:

      @Generable
      struct ImageCaptioningResult {
          let caption: String
          let objects: [String]
      }

- Prompting for Structure: Direct the model with prompts like, "Write a one-sentence caption from these detected labels and list up to five objects."

- Streaming and Real-Time Feedback: Use streaming APIs to surface partial captions as they are generated.

- UI Binding: Display results right in your photo picker, gallery, or camera overlay. Update as streaming results come in for a responsive experience.

Privacy and Speed:

- On-Device Processing: No image data leaves the user's device unless your own code sends it somewhere.

- Speed Benchmarks: Short captions can feel near-instant on recent Apple silicon, but measure on your target devices instead of assuming a fixed figure. Expect batch time to grow roughly linearly with the number of images.

- Data Minimization: Only process and persist user photos if truly needed. Avoid background processing without clear user consent.

Optimization Tips:

- Prefer JPEG/PNG at reasonable resolutions (under 1080p) for fastest assessment.

- Reuse inferences by caching common captions for repeated images.

- Always evaluate accessibility needs; rich captions boost usability for all users.
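The caching tip above can be as simple as a small in-memory store. The sketch below is plain Swift with no framework dependency. `CaptionCache` is a hypothetical type, the key is whatever stable identifier you already have for an image (a content hash or a photo library identifier, for instance), and eviction is first-in, first-out for brevity.

```swift
import Foundation

/// A small in-memory caption cache with first-in, first-out eviction.
struct CaptionCache {
    private var storage: [String: String] = [:]
    private var insertionOrder: [String] = []
    let capacity: Int

    init(capacity: Int) {
        self.capacity = max(1, capacity)
    }

    /// Returns the cached caption for an image key, if any.
    func caption(for key: String) -> String? {
        storage[key]
    }

    /// Stores a caption, evicting the oldest entry when the cache is full.
    mutating func store(_ caption: String, for key: String) {
        if storage[key] == nil {
            insertionOrder.append(key)
            if insertionOrder.count > capacity {
                let evicted = insertionOrder.removeFirst()
                storage[evicted] = nil
            }
        }
        storage[key] = caption
    }
}

var cache = CaptionCache(capacity: 2)
cache.store("A dog on a beach", for: "img-1")
cache.store("City skyline at dusk", for: "img-2")
cache.store("Bowl of ramen", for: "img-3")   // evicts img-1
```

Check the cache before starting a generation request, and only call the model on a miss.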

Key Takeaways:

- On-device processing means users' photos never leave their device.

- Streaming provides immediate, incremental feedback for responsive UIs.

- Type-safe output keeps everything simple for app logic and future features.

Predictive Text Input and Personalization

Predictive typing and next-word suggestions can get smarter with the on-device LLM in iOS 26. Your apps can offer context-aware typing help without external dependencies.

Integration Steps:

- Create a Session: Initialize a `LanguageModelSession` with instructions focused on text completion. The framework does not ship a dedicated next-word model, so the general on-device model does the work.

- Session Configuration: Use user context (conversation history, document purpose) to seed predictions while maintaining privacy boundaries.

- Define Output:

      @Generable
      struct NextWordPrediction {
          let predictedWord: String
          // Model-generated estimate, not a calibrated probability.
          let confidence: Double
      }

- Bind to Text UI: Connect your predictions to a custom SwiftUI text field, a custom keyboard extension, or any UI element you control.

- Personalization: Use short-term user input (session-based memory, not stored across device restarts) to further tune suggestions. Custom adapters can also personalize by app context.

UI Integration Tips:

- Non-Intrusive UI: Show predictions above the keyboard or inline; never interrupt the user.

- Stream as You Type: Display predictions as streaming snapshots update, so suggestions adapt with each keystroke.

- Accessibility: Make sure text suggestions support VoiceOver and dynamic text sizes.

Performance Notes:

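- Prediction Latency: Generation runs at tens of tokens per second (Apple cites about 30 on iPhone 15 Pro), so even a short suggestion takes a noticeable fraction of a second. Debounce requests instead of firing one on every keystroke.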

- Memory Best Practices: Flush or rotate session context frequently to avoid stale suggestions.

- Testing: Profile for lag spikes in long-form text or high-frequency taps.
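One way to "flush or rotate session context" is to keep a rolling window of recent text and rebuild the prompt from it. The sketch below is plain Swift. `RollingContext` is a hypothetical helper, and it budgets by character count as a crude stand-in for tokens, which is an assumption you would tune against the model's actual context window.

```swift
import Foundation

/// Keeps the most recent text snippets within a fixed character budget.
struct RollingContext {
    private(set) var entries: [String]
    let maxCharacters: Int

    init(maxCharacters: Int) {
        self.entries = []
        self.maxCharacters = maxCharacters
    }

    /// Total characters currently held across all entries.
    var totalCharacters: Int {
        entries.reduce(0) { $0 + $1.count }
    }

    /// The context as a single prompt string, oldest entry first.
    var prompt: String {
        entries.joined(separator: "\n")
    }

    /// Adds new text, then drops the oldest entries until the budget fits.
    /// The newest entry is always kept, even if it alone exceeds the budget.
    mutating func append(_ text: String) {
        entries.append(text)
        while entries.count > 1 && totalCharacters > maxCharacters {
            entries.removeFirst()
        }
    }
}

var context = RollingContext(maxCharacters: 10)
context.append("hello")
context.append("world")
context.append("abc")   // "hello" is dropped to get back under budget
```

Starting a new session with `context.prompt` as its seed keeps suggestions fresh without letting the transcript grow without bound.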

Key Takeaways:

- Smarter, privacy-first typing boosts app usability immediately.

- Streaming and type-safety fit predictive UIs perfectly.

- User context powers meaningful suggestions without privacy trade-offs.

Tool Calling: Extending App Intelligence with External APIs

One hidden superpower of the Foundation Models framework is type-safe tool calling. With this, your app's AI can call custom tools (fetching weather, finding recipes, or controlling smart devices) directly in response to a user's prompt. This makes your app feel truly intelligent and action-ready.

Integration Steps:

- Define a Custom Tool: Conform your Swift type to the `Tool` protocol, implementing `name`, `description`, and the `call(arguments:)` method that performs your API logic:

      struct WeatherTool: Tool {
          let name = "fetchWeather"
          let description = "Get the current temperature by city."

          func call(arguments: WeatherRequest) async throws -> String {
              // Call your weather API here and describe the result for the model.
              "Weather for \(arguments.city) goes here"
          }
      }

- Register with Model Session: Pass the tool in the `tools:` array when you create the session. The LLM can then invoke this tool by name, passing structured arguments.

- Guide Input/Output: Mark the argument type `@Generable` and use `@Guide` on its properties, ensuring type safety:

      @Generable
      struct WeatherRequest {
          @Guide(description: "City name")
          let city: String
      }

- Prompt Model for Actions: Frame prompts like, "What's the weather in London right now?" The model decides when to call your tool and pipes the answer into the reply.
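The steps above can be sketched as one small, offline-friendly example. It assumes a deployment target of iOS 26 or macOS 26 and the shipping FoundationModels API, where a tool's `call(arguments:)` may return a `String`. Early betas wrapped results in a `ToolOutput` type, so adjust if your SDK differs. `TemperatureTool`, `askAboutWeather`, and `stubTemperature` are hypothetical, and the lookup table stands in for a real weather service.

```swift
import Foundation
#if canImport(FoundationModels)
import FoundationModels

struct TemperatureTool: Tool {
    let name = "fetchTemperature"
    let description = "Get the current temperature in Celsius for a city."

    @Generable
    struct Arguments {
        @Guide(description: "City name, for example London")
        var city: String
    }

    func call(arguments: Arguments) async throws -> String {
        // Return a plain-language result the model can fold into its reply.
        guard let celsius = stubTemperature(for: arguments.city) else {
            return "No temperature data for \(arguments.city)."
        }
        return "\(arguments.city): \(celsius) °C"
    }
}

/// Registers the tool at session creation and lets the model decide
/// whether to call it while answering.
func askAboutWeather() async throws -> String {
    let session = LanguageModelSession(
        tools: [TemperatureTool()],
        instructions: "Use the fetchTemperature tool for weather questions."
    )
    let response = try await session.respond(to: "What's the temperature in London right now?")
    return response.content
}
#endif

/// Hypothetical stand-in for a real weather API call.
func stubTemperature(for city: String) -> Double? {
    let table = ["london": 14.5, "cupertino": 22.0]
    return table[city.lowercased()]
}
```

Returning a readable message for the "no data" case, in place of a thrown error, gives the model something it can explain to the user.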

Examples of Intelligent Tooling:

- Recipe Fetching: Hook into a recipe API to surface dinner ideas from ingredients in a user’s note.

- Reminders: Register a reminder tool to create or list reminders straight from chat.

- Real-Time Data: Link to HealthKit, MapKit, or your own market-data service for live updates.

Performance Tips:

- Async Calls: All tools should run asynchronously. Use Task or async/await to keep UIs fluid.

- Graceful Error Handling: Always define clear outputs for errors—e.g., network failures or invalid queries.

- Limit Tool Access: Only surface tools matching the current user context; don’t overload the model with low-relevance actions.

- Security: Validate inputs in your tool implementations to avoid accidental or malicious misuse.

- Session State: Use persistent context or session memory to track tool usage and streamline dialog.
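For the security tip above, remember that tool arguments are model-generated text and deserve the same validation as user input. Here is a minimal sketch in plain Swift. `validatedCity` and `CityValidationError` are hypothetical names, and the 80-character limit and allowed punctuation are arbitrary choices for illustration.

```swift
import Foundation

enum CityValidationError: Error, Equatable {
    case empty
    case tooLong
    case invalidCharacters
}

/// Trims and validates a city name before it reaches a network request.
func validatedCity(_ raw: String) throws -> String {
    let trimmed = raw.trimmingCharacters(in: .whitespacesAndNewlines)
    guard !trimmed.isEmpty else { throw CityValidationError.empty }
    guard trimmed.count <= 80 else { throw CityValidationError.tooLong }

    // Letters in any script, plus spaces and a little punctuation.
    let allowed = CharacterSet.letters.union(CharacterSet(charactersIn: " -'."))
    guard trimmed.unicodeScalars.allSatisfy({ allowed.contains($0) }) else {
        throw CityValidationError.invalidCharacters
    }
    return trimmed
}
```

Call a check like this at the top of your tool's `call(arguments:)` and return a short explanatory message when it throws, so the model can ask the user to rephrase.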

Key Takeaways:

- Apps can now bridge on-device intelligence and live data with safe, type-checked methods.

- Tool-calling makes AI-powered workflows dynamic, practical, and trustworthy.

- User privacy stays at the forefront because AI calls remain tightly scoped and supervised.

By weaving these Foundation Models examples into your app, you are not just making your app smarter but giving your users fast, private, and reliable features that feel ahead of the curve. Whether you're automating chat, making sense of images, building clever typing aids, or connecting to real-world data, these integration recipes set you up for success.

Optimizing Performance and Privacy in Foundation Models-Powered Apps

Running Apple Foundation Models on iOS 26 doesn't just mean smarter apps. It means balancing speed, efficiency, and privacy every step of the way. The most successful apps aren't just accurate: they feel instant, and they protect user data from start to finish. Let's break down proven ways to give users a snappy, private experience when building with the Foundation Models framework, focusing on two important areas: latency reduction and privacy-by-design.

Latency and Efficiency: Model Prewarming and Streaming

The feeling of instant response is what makes on-device AI impressive. Nobody wants to wait for answers, especially in feature-rich SwiftUI apps. Here’s how top apps achieve this:

- Model Prewarming: First impressions matter. Create your session as the user navigates to a screen that needs AI, not after an action is tapped. Call `prewarm()` on it to get the model ready behind the scenes, especially if you know an AI task is likely. This shortens the "cold start" delay and lowers your Time-to-First-Token (TTFT).

- Streaming Outputs: Don't let users stare at a loader. Streaming means the framework hands back partial results as the model works. With SwiftUI, you can update the interface in real time, like progressive text summaries, live chat responses, or picture captions populating as they're formed. This improves perceived speed and keeps users engaged.

- Lean Model Design: Only include the properties your UI needs right now. Even small optimizations, like dropping unused fields from your `@Generable` types, mean fewer tokens to generate and a faster inference loop.

Practical ways to lower app latency and keep interfaces responsive include:

- Warm up models just-in-time instead of at app launch.

- Use snapshot streaming, updating your UI as each partial result arrives.

- Cache common model outputs if the same data is used frequently.

- Monitor on-device performance. Apple's published iPhone 15 Pro figures are about 0.6 ms per prompt token before the first output token and about 30 tokens per second after that, so keeping prompts and outputs short is the surest way to keep busy interfaces responsive.
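To budget latency before you build a screen, a rough estimate helps. The sketch below is plain Swift and uses Apple's published 2024 iPhone 15 Pro figures as defaults (about 0.6 ms per prompt token to reach the first output token, about 30 generated tokens per second). Real numbers vary by device, thermal state, and OS version, and `estimateLatency` is a hypothetical helper, so treat the output as a planning aid, not a measurement.

```swift
import Foundation

struct LatencyEstimate {
    let timeToFirstToken: Double   // seconds
    let total: Double              // seconds
}

/// Estimates response time from prompt length and expected output length.
func estimateLatency(promptTokens: Int,
                     outputTokens: Int,
                     msPerPromptToken: Double = 0.6,
                     tokensPerSecond: Double = 30) -> LatencyEstimate {
    let timeToFirstToken = Double(promptTokens) * msPerPromptToken / 1000.0
    let generation = Double(outputTokens) / tokensPerSecond
    return LatencyEstimate(timeToFirstToken: timeToFirstToken,
                           total: timeToFirstToken + generation)
}

// A 1,000-token prompt with a 60-token summary:
// roughly 0.6 s to the first token and roughly 2.6 s overall.
let estimate = estimateLatency(promptTokens: 1000, outputTokens: 60)
print(estimate.timeToFirstToken, estimate.total)
```

The arithmetic shows why streaming matters: most of the wait is generation time, and showing partial output hides it.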

Build your app to feel “live,” using these model and API features. Users will notice.

Data Privacy, Guardrails, and Local Storage

Apple Foundation Models put privacy front and center. Apps that use on-device AI through the Foundation Models framework don't send sensitive data off-device unless your own code does so. Here's how you can sustain that trust and protect user information at every step:

- Privacy-by-Design: The model is trained on strictly filtered data, with personal details and low-quality info removed. On-device inference means prompts, results, and sensitive content never transit the internet or hit remote servers.

- Supervised Fine-Tuning with Adapters: If you want to personalize for specific workflows (like smarter notifications or tailored replies), use Apple's lightweight adapter modules. These fine-tune the model for your needs without learning private data. Adapters are much smaller than the base model, so they add modest storage and memory overhead.

- Responsible AI Guardrails: Apple builds in automatic content filtering, strict user data boundaries, and bias checks. These safety features run at both the model and framework level. You can add property descriptions or constraints with `@Guide` to further keep outputs within the bounds you expect.

- Constrained Decoding: Instead of returning free-form, unpredictable text, the Foundation Models framework guarantees structural correctness by matching AI answers to your predefined Swift types. This means fewer surprises and tighter control over what your app presents.

- Local Storage of AI Outputs: Want to keep generated summaries, captions, or AI-built data offline? Store it safely using SwiftData or Core Data. Best practices include:

- Encrypt any sensitive AI outputs before saving, using Apple’s CryptoKit.

- Keep only the information users need, and regularly clear out unneeded records.

- Never log prompts or outputs containing personal details. Stick to in-memory or ephemeral storage for any private or security-sensitive AI task.

- Make clear in your privacy policy what, if any, AI results are stored on-device.
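As a sketch of the encryption advice above, the functions below seal a generated summary with AES-GCM from CryptoKit before it is written to disk. `encryptSummary` and `decryptSummary` are illustrative names. Key management is out of scope here: the example assumes you create the `SymmetricKey` once and keep it in the Keychain.

```swift
import Foundation
#if canImport(CryptoKit)
import CryptoKit

/// Encrypts text with AES-GCM and returns nonce, ciphertext, and tag
/// packed into a single blob suitable for storage.
func encryptSummary(_ summary: String, with key: SymmetricKey) throws -> Data {
    let sealed = try AES.GCM.seal(Data(summary.utf8), using: key)
    // `combined` is only nil for non-standard nonce sizes, which are not used here.
    guard let combined = sealed.combined else {
        throw CocoaError(.coderInvalidValue)
    }
    return combined
}

/// Reverses `encryptSummary`; throws if the blob was tampered with
/// or the key is wrong.
func decryptSummary(_ blob: Data, with key: SymmetricKey) throws -> String {
    let box = try AES.GCM.SealedBox(combined: blob)
    let plaintext = try AES.GCM.open(box, using: key)
    return String(decoding: plaintext, as: UTF8.self)
}
#endif
```

Store the returned `Data` in your SwiftData or Core Data model in place of the plain string, and decrypt only at the point of display.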

By following these steps, your app not only feels snappy and modern but also stays in sync with user trust. With Apple’s focus on responsible AI and on-device privacy, you build features that hit all the right notes: intelligent, lightning-fast, and respectful of user data every step of the way.

The next time you're planning your app's AI-driven interface, keep these techniques in mind. They'll help your project shine with the Foundation Models framework, and give you peace of mind that your app protects users as much as it surprises them.

Future-Proofing Your iOS Apps with Apple Intelligence and Foundation Models

Developers building with the Apple Foundation Models framework on iOS 26 are stepping into a future where smart, private, and lightning-fast app experiences are standard. Foundation Models, packed with Apple Intelligence, are reshaping how apps think and respond—and the best part is, this is just the beginning. Apple’s push for on-device AI in Swift, updates to the API, and a clear focus on privacy and performance mean your apps will never feel left behind. Staying current with the system’s direction keeps your app relevant, no matter how quickly technology changes.

How Foundation Models Are Evolving

Apple is moving fast with its Foundation Models. The on-device model, with around 3 billion parameters, was only the starting point. Apple's 2025 technical report describes a parallel-track mixture-of-experts (PT-MoE) design with interleaved attention for its server model, so it can handle longer inputs efficiently. You get support for more languages and better cross-task reasoning right out of the box, thanks to training not only on massive text data but also on a large set of image-text pairs.

The secret sauce is Apple's focus on privacy and safety without sacrificing power. Apple describes architecture changes in the current on-device model aimed at lower memory use and faster time-to-first-token than its predecessor, all optimized for Apple silicon. Exact gains depend on the device, so benchmark on the hardware you target. Models continue to get faster and smarter. If last year's AI was a sports car, this year's is a supersonic jet.

What does it mean for you?

- Instant performance jumps: OS updates bring better speed and smarter outputs without code rewrites.

- Ongoing security and privacy: Safety guardrails improve so your app can handle sensitive content reliably.

- Multilingual updates: Automatic support for more languages keeps your app global.

Cross-Platform and System-Level Potential

Apple isn't stopping with iPhone and iPad. The Foundation Models framework spans much of the Apple ecosystem:

- macOS: Desktop apps get on-device AI for everything from summarizing documents to controlling complex workflows.

- watchOS: The framework is not available on Apple Watch in the initial release. A companion iPhone app can do the generation and send results, such as message summaries or suggested replies, to the watch.

- iPadOS: Support for split-screen multitasking means you can run AI-driven features side-by-side with other apps—think quick document checks or visual search while users work elsewhere.

- Vision Pro and beyond: XR and mixed-reality apps can use on-device models for live understanding of the environment, image labeling, or real-time content translation.

Thanks to system-wide APIs like App Intents, Shortcuts, and real-time background tasks, the line between “feature” and “platform” is vanishing. A user’s reminder, calendar, and chat can all flow into your app’s experience with intelligent suggestion, voice control, and predictive actions.

Key cross-platform benefits:

- Single codebase, many devices: One Foundation Models integration in Swift can unlock features across iPhone, iPad, Mac, and Vision Pro.

- Consistent privacy: On-device inference works everywhere, not just on mobile—your users trust that their data stays on their device.

- Shared AI tools: Universal APIs like Vision, Natural Language, and Speech keep workflows smooth across all screens.

Anticipating New Features and Rapid Updates

Apple rolls out AI improvements at the system level, so your app benefits even as models gain new strengths. With each iOS and macOS update, you might see:

- Better reasoning tasks (planning, summarization, classification)

- Smarter multimodal understanding (images, text, audio)

- Expanded tool-calling abilities for real-time data and automation

- Higher-quality responses with less hallucination and bias

For developers, this means:

- Use the `@Generable` and `@Guide` macros in your app logic now. As Foundation Models improve, your structured Swift output gets even more accurate with no extra work.

- Regularly check Apple's Foundation Models documentation for new capabilities, especially after major OS updates.

- Test your apps early with system betas, using Xcode 26’s playgrounds for hands-on experiments.

- Monitor performance and privacy logs—Apple often upgrades how data is handled, and a quick tune-up ensures you keep pace.

Adopting new APIs as they land in the Foundation Models framework keeps your app fresh for users and smooth in the App Store review process.

Staying Current with the Apple Ecosystem

Keeping up with Apple Foundation Models and related updates is not a one-time job. It's an ongoing advantage.

Best habits for future-proofing:

- Subscribe to Apple Developer news and check release notes for the Foundation Models framework.

- Join developer forums or communities that share Foundation Models best practices, which often surface creative prompts and smarter integration patterns.

- Automate regular testing as new Xcode releases drop, so your app takes full advantage of performance improvements.

- Maintain a clear, privacy-first codebase using on-device settings wherever possible. Apple’s platform rewards apps in compliance with their privacy and safety ethos.

The big takeaway: The smarter your app becomes today, the more ready you’ll be for what’s next. Apple keeps raising the bar for on-device AI, and Swift-based, privacy-centered design lets you meet (or beat) every new expectation. Cross-device support, ongoing model upgrades, and a thriving developer ecosystem mean your app has a strong future—on any screen Apple dreams up next.

Key Takeaways

Taking everything you've learned in this tutorial, it's clear these tools let you rethink what your iOS, iPadOS, and macOS apps can do right out of the box. Not only do you get fast, private, and reliable AI, but you also gain new flexibility to deliver richer app experiences. Here are the big lessons and practical highlights every developer should keep in mind for any project involving on-device AI with Swift and Foundation Models.

On-Device AI Brings Speed and Privacy

Apple’s choice to make Foundation Models run directly on Apple Silicon hardware stands out. Here’s what you can bank on:

- Zero round-trips: All AI tasks (summarization, captioning, tool-calling) happen on the device, so results are near-instant and never wait on a cloud server.

- True data privacy: User content (texts, images, voice) stays local unless your own code sends it elsewhere. Apps built on the Foundation Models framework earn user trust from the start.

- Battery and performance optimized: AI tasks won’t drain the battery or lock up user devices, thanks to custom tuning for Apple hardware.

Swift Macros Change How You Handle AI Data

The biggest workflow shift comes from the @Generable and @Guide macros in Swift. These streamline every interaction with the Foundation Models framework:

- You design the output: Structure expected AI results using simple, type-checked Swift definitions.

- Fewer bugs: No more re-parsing unpredictable AI text. The model delivers exactly what your UI wants, reducing error-prone code.

- Stronger safety nets: Type safety and model guardrails protect both your logic and your users’ experience.
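As a minimal sketch of that workflow, the example below defines a hypothetical MessageSummary type and asks the on-device model to fill it in. The type name, field names, and prompt wording are assumptions made for illustration. @Generable, @Guide, LanguageModelSession, and respond(to:generating:) come from the FoundationModels framework, and the code only produces output on a device with Apple Intelligence enabled.

```swift
import FoundationModels

// Hypothetical output type for this sketch; the field names are illustrative.
@Generable
struct MessageSummary {
    @Guide(description: "A one-sentence summary of the message")
    var headline: String

    @Guide(description: "Follow-up actions for the reader", .maximumCount(3))
    var actionItems: [String]
}

// Asks the on-device model to produce a MessageSummary for the given text.
func summarize(_ message: String) async throws -> MessageSummary {
    let session = LanguageModelSession(
        instructions: "Summarize the user's message for a notification preview."
    )
    let response = try await session.respond(
        to: "Summarize this message: \(message)",
        generating: MessageSummary.self
    )
    // `content` is already a typed MessageSummary, so no text parsing is needed.
    return response.content
}
```

Because the result is a plain Swift value, a view can bind to headline and actionItems directly instead of parsing free-form text.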

Performance Techniques Make a Noticeable Difference

Great AI features must be fast and feel native. Keep these learnings in your toolkit:

- Model prewarming: Kick off model loading in the background before users start an AI-powered action. This noticeably reduces first-use lag.

- Streaming responses: Use streaming APIs to give users a live, dynamic response as the model works. It feels more responsive and interactive.

- Output field trimming: Only request what’s needed. Smaller output types mean fewer generated tokens, which cuts latency and memory use and keeps your app lean and agile.
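The first two techniques can be sketched roughly as follows. The function names and the onUpdate callback are hypothetical. The sketch also assumes the release version of the framework, where each streamed element is a snapshot whose content holds the text generated so far; early betas yielded the partial value directly, so check the SDK you build against.

```swift
import FoundationModels

// Warm the model while the user is still navigating toward the AI feature.
// Pass the same session you will later use to generate.
func prepareForCaptioning(_ session: LanguageModelSession) {
    session.prewarm()
}

// Streams a caption and forwards each partial result to the caller.
// `onUpdate` is a hypothetical callback, for example one that sets SwiftUI state.
func streamCaption(
    for note: String,
    using session: LanguageModelSession,
    onUpdate: (String) -> Void
) async throws {
    let stream = session.streamResponse(to: "Write a one-line caption for: \(note)")
    for try await snapshot in stream {
        // Each snapshot carries the text generated so far, not only the newest piece.
        onUpdate(snapshot.content)
    }
}
```

A typical call site would invoke prepareForCaptioning when the screen appears and streamCaption when the user taps the action, so the model load overlaps with the time the user spends reading the screen.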

Real App Features Now Run Smarter and Safer

Across the Foundation Models framework examples in this guide, you’ll find features like summarization, categorization, real-time captions, and tool-triggered automations all working with:

- On-device processing: The majority of use cases run without needing internet access, from smart messaging to instant image labeling.

- Cloud fallback (by permission): More complex or heavy jobs can scale up to Apple’s secure private cloud, and this is always clearly flagged.

- Universal integration: One AI workflow supports iPhone, iPad, Mac, and Vision Pro with a single codebase, on devices that support Apple Intelligence.

System-Level Support Future-Proofs Your Apps

Foundation Models keep evolving, and so will your app’s AI features if you:

- Follow API updates: Apple regularly boosts speed, expands language and image support, and tightens privacy guardrails. Keeping current future-proofs your work.

- Embrace system features: Use App Intents, Shortcuts, and background tasks for deeper integration and automation. Your AI-powered features feel native everywhere.
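One way to connect the two is to wrap a model call in an App Intent so it shows up in Shortcuts. This is a sketch under assumptions: SummarizeTextIntent, its title, and the instruction text are invented for illustration, while AppIntent, @Parameter, and LanguageModelSession are real framework types.

```swift
import AppIntents
import FoundationModels

// Hypothetical intent for this sketch; it becomes available in Shortcuts
// once the app that declares it is installed.
struct SummarizeTextIntent: AppIntent {
    static let title: LocalizedStringResource = "Summarize Text"

    @Parameter(title: "Text")
    var text: String

    func perform() async throws -> some IntentResult & ReturnsValue<String> {
        let session = LanguageModelSession(
            instructions: "Summarize the input in two sentences or fewer."
        )
        let response = try await session.respond(to: text)
        // Hand the summary back to Shortcuts so later actions can use it.
        return .result(value: response.content)
    }
}
```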

Rules for Responsible AI

Building with Apple Foundation Models is as much about user trust as it is about power. Keep these best practices close:

- Respect local processing: On-device first, cloud only with transparency and consent.

- Handle user data with care: Never store or log sensitive AI outputs unless needed and always encrypt when you do.

- Rely on Swift type safety: Always match structure and guard against the unexpected in your logic.

Quickfire Recap

Here’s a snapshot for your next project checklist:

- Start with on-device models for privacy and speed.

- Structure your AI input/output using Swift macros.

- Use model prewarming and streaming for top-notch UX.

- Let users know when cloud or server is needed, keeping privacy at the center.

- Regularly check for Apple’s API and framework updates.

By baking these lessons into your workflow, your apps will define what smart, safe, and user-friendly AI looks like on every Apple device. If you’re building with the Foundation Models framework, every one of these takeaways will save you time, boost app quality, and help set your work apart.

Frequently Asked Questions: Apple Foundation Models and On-Device AI for iOS 26

The Apple Foundation Models framework brings a lot of power and flexibility to your iOS apps, and developers naturally have plenty of questions about how it all works. This section answers the most common questions about Apple Foundation Models and on-device AI in Swift, so you have real answers before integrating or scaling up your own projects.

Here’s a breakdown of top questions—from technical choices to privacy, performance, and practical usage in real-world apps.

What Are Apple Foundation Models and How Are They Used in iOS 26?

Apple Foundation Models are large language and vision models built to run both on-device and in Apple’s secure private cloud. These models support a range of AI tasks, including text summarization, structured extraction, classification, conversational chat, image captioning, and even automation through tool-calling.

Key uses in iOS 26 include:

- On-device LLM for fast, private AI (like summarizing messages and extracting useful info)

- Multimodal understanding for working with both language and images together

- Dynamic tool invocation so your app can trigger custom functions in response to a user prompt

Swift developers tap into these models through the Foundation Models framework, which offers a blend of strong privacy, high speed, and flexible API design.
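At its simplest, a request is a session plus a prompt. The sketch below is illustrative only: the function name and the instruction text are assumptions, and it relies on LanguageModelSession and respond(to:) from the FoundationModels framework running on a device with Apple Intelligence enabled.

```swift
import FoundationModels

// Sends one text prompt to the on-device model and returns the plain-text reply.
func extractDates(from message: String) async throws -> String {
    let session = LanguageModelSession(
        instructions: "List every date or time mentioned in the user's message, one per line."
    )
    let response = try await session.respond(to: message)
    return response.content
}
```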

Does On-Device AI Mean My App Data Stays Private?

Yes, that’s the design choice at the heart of Apple Intelligence. When you use Foundation Models on-device, user data never leaves the device for most AI tasks. Only when you opt into calling Apple’s Private Cloud Compute—for more advanced features or bigger jobs—might data leave the device, and even then it’s kept encrypted and protected with Apple’s strict privacy rules.

For privacy-focused apps, always use .onDevice inference unless you clearly need cloud scaling or special context.

What Are the @Generable and @Guide Swift Macros?

Apple introduced two special Swift macros:

- @Generable: This macro helps you define a concrete Swift type the Foundation Model must fill with its answer. The model returns type-safe, structured data, making it easy to bind results to your app UI.

- @Guide: Use this macro to give even tighter constraints and instructions to the AI, guiding how it formats or limits its response.

Why does this matter? These macros prevent the headache of parsing unpredictable model output. Instead, you get clean, reliable Swift data every time, with fewer bugs and better user experiences.
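To make the constraint side concrete, here is a small sketch in which @Guide limits a number to a range and a @Generable enum restricts the answer to a fixed set of cases. Sentiment, ReviewAnalysis, and the prompt text are hypothetical names chosen for this example.

```swift
import FoundationModels

// The model must pick exactly one of these cases.
@Generable
enum Sentiment {
    case positive
    case neutral
    case negative
}

// Hypothetical result type; the guides narrow what the model may generate.
@Generable
struct ReviewAnalysis {
    @Guide(description: "Overall tone of the review")
    var sentiment: Sentiment

    @Guide(description: "Star rating implied by the review", .range(1...5))
    var rating: Int
}

func analyze(review: String) async throws -> ReviewAnalysis {
    let session = LanguageModelSession()
    let response = try await session.respond(
        to: "Analyze this review: \(review)",
        generating: ReviewAnalysis.self
    )
    return response.content
}
```

Since rating is constrained during generation, the calling code can feed it straight into a star control without first clamping or validating the value.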

Can I Use Foundation Models for Both Text and Images?

Absolutely. One of the coolest upgrades in Apple’s new framework is built-in multimodal support. This means your app can:

- Understand and summarize text (like emails, messages, or documents)

- Analyze images (describe content, identify objects, generate captions)

- Combine inputs (give your model both an image and a question, and get back a structured answer)

Simply pass either text or image input to the appropriate API endpoint, and receive structured results back, guided by your output type.

How Fast Is On-Device AI Inference on Apple Silicon?

Apple Silicon (M-series chips and A17 Pro or newer) delivers near-instant inference:

- Apple has reported a time-to-first-token latency of about 0.6 milliseconds per prompt token, plus a generation rate of about 30 tokens per second, on iPhone 15 Pro

- Full-image captioning commonly completes in under one second for regular photos

- Streaming responses mean partial results appear live as the model works, so your UI feels even faster

For best speeds, limit your output fields to just what your UI needs, and prewarm the model right before expected use.
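A quick back-of-the-envelope estimate shows why trimming output matters. The sketch below assumes the figures Apple has published for iPhone 15 Pro (about 0.6 ms of time-to-first-token per prompt token and about 30 generated tokens per second). Treat them as rough assumptions, not guarantees, and note that the token counts are invented for illustration.

```swift
// Published iPhone 15 Pro figures, used here only as rough assumptions.
// Real latency depends on the device, the prompt, and thermal state.
let secondsPerPromptToken = 0.0006   // about 0.6 ms per prompt token before the first output token
let generatedTokensPerSecond = 30.0  // about 30 output tokens per second

// Rough estimate: time to process the prompt plus time to generate the output.
func estimatedSeconds(promptTokens: Int, outputTokens: Int) -> Double {
    let timeToFirstToken = Double(promptTokens) * secondsPerPromptToken
    let generationTime = Double(outputTokens) / generatedTokensPerSecond
    return timeToFirstToken + generationTime
}

// Same 500-token prompt, two different output sizes.
let verbose = estimatedSeconds(promptTokens: 500, outputTokens: 300)  // roughly 0.3 s + 10 s
let trimmed = estimatedSeconds(promptTokens: 500, outputTokens: 60)   // roughly 0.3 s + 2 s
print(verbose, trimmed)
```

Under these assumptions, generation time dominates the total, which is why requesting fewer fields usually helps more than shortening the prompt.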

What About Cloud Fallback—Will My App Randomly Switch to the Cloud?

No, not unless you allow it. As the developer, you control how inference runs:

- Force .onDevice for private, local inference only

- Set .automatic to let the framework intelligently choose the best model, only falling back to cloud if needed (e.g., very large prompts or longer context windows)

- Your app’s user must approve cloud-based Apple Intelligence features on-device

Cloud fallback provides a “best of both worlds” option, scaling up only when local hardware hits its limits.

How Do I Personalize My App’s AI Without Compromising Privacy?

You can use Apple’s adapter modules—small files that fine-tune behavior for individual workflows (like notification filtering, specialized chat replies, etc). These adapters update model behavior without retaining or sending private user data off-device.

Best tips for personalization:

- Use adapters for simple task tuning

- Avoid storing full user histories or prompts in local or remote storage unless crucial (always encrypt if storing)

- Reset session context regularly for sensitive workflows

This keeps user data safe while letting your app feel more personal and helpful.

Can Foundation Models Integrate with Other iOS Features and APIs?

Yes, deeply. Apple designed the Foundation Models framework to play nicely with:

- App Intents and Shortcuts (trigger actions and automations)

- SwiftUI and UIKit (stream responses into any UI element)

- System APIs (fetch live data—weather, health, maps—using tool-calling)

You can register custom “tools” the model can call by name, making your app’s unique features AI-accessible from structured prompts.
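A custom tool might look roughly like the sketch below. FindNotesTool, its hard-coded notes, and the instruction text are hypothetical stand-ins for your own app logic. The sketch also assumes the release version of the Tool protocol, where call(arguments:) can return a String; early betas used a dedicated output type instead.

```swift
import Foundation
import FoundationModels

// Hypothetical tool for this sketch; a real app would query its own data store.
struct FindNotesTool: Tool {
    let name = "findNotes"
    let description = "Finds the user's notes that mention a keyword."

    @Generable
    struct Arguments {
        @Guide(description: "The keyword to search for")
        var keyword: String
    }

    func call(arguments: Arguments) async throws -> String {
        // Placeholder data standing in for the app's real notes.
        let notes = ["Buy milk", "Call the dentist", "Milk delivery on Friday"]
        let matches = notes.filter {
            $0.localizedCaseInsensitiveContains(arguments.keyword)
        }
        return matches.isEmpty ? "No matching notes." : matches.joined(separator: "\n")
    }
}

// Registers the tool on a session; the model decides when to call it.
func answerUsingNotes(_ question: String) async throws -> String {
    let session = LanguageModelSession(
        tools: [FindNotesTool()],
        instructions: "Use the findNotes tool when the user asks about their notes."
    )
    let response = try await session.respond(to: question)
    return response.content
}
```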

Are There Any Special Beta or Hardware Requirements?

To use the on-device LLM features in iOS 26, you need:

- iOS 26 or later (developer beta for early adopters)

- Xcode 26 to access the Foundation Models Swift API and macros

- An Apple Silicon device (A17 Pro, or M1 or newer, for optimal performance)

- Apple Intelligence enabled in Settings during development and testing

Some tasks, like serving very large contexts or batch processing, may run faster or only be available on newer devices or with private cloud fallback.
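Because these requirements vary from device to device, it helps to check availability at runtime before showing an AI feature. The sketch below uses the availability property of SystemLanguageModel. The function name and the user-facing strings are assumptions you would adapt to your app.

```swift
import FoundationModels

// Returns nil when the on-device model is ready, or a message explaining why it is not.
func modelUnavailableMessage() -> String? {
    switch SystemLanguageModel.default.availability {
    case .available:
        return nil
    case .unavailable(.deviceNotEligible):
        return "This device does not support Apple Intelligence."
    case .unavailable(.appleIntelligenceNotEnabled):
        return "Turn on Apple Intelligence in Settings to use this feature."
    case .unavailable(.modelNotReady):
        return "The model is still downloading. Try again shortly."
    case .unavailable(let other):
        // Covers reasons added in future OS releases.
        return "The model is unavailable: \(other)"
    }
}
```

A view can call this once on appearance and either hide the AI entry point or show the returned message in its place.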

How Do I Handle Errors or Unexpected Output from The Model?

The Foundation Models framework gives you clean error handling:

- If output can’t be made to fit your @Generable type, you get a clear error with debugging info.

- Streaming snapshot APIs let you track the model’s progress and handle mid-task issues smoothly.

- For tool-calling, always wrap your call logic with defensive checks and friendly user messages.

This approach minimizes crash risk and gives you full control over your app’s experience.
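In practice, that can look like the sketch below, which maps a few of the framework’s GenerationError cases to friendly messages. ActionItems, TaskExtraction, and the message strings are hypothetical. Only a subset of error cases is handled explicitly, with a generic fallback for the rest.

```swift
import FoundationModels

// Hypothetical output type for this sketch.
@Generable
struct ActionItems {
    @Guide(description: "Tasks mentioned in the text", .maximumCount(5))
    var tasks: [String]
}

// Either the extracted tasks or a message that is safe to show the user.
enum TaskExtraction {
    case tasks([String])
    case failed(message: String)
}

func extractTasks(from text: String) async -> TaskExtraction {
    let session = LanguageModelSession()
    do {
        let response = try await session.respond(
            to: "List the tasks in this text: \(text)",
            generating: ActionItems.self
        )
        return .tasks(response.content.tasks)
    } catch LanguageModelSession.GenerationError.exceededContextWindowSize {
        return .failed(message: "That text is too long. Try a shorter selection.")
    } catch LanguageModelSession.GenerationError.guardrailViolation {
        return .failed(message: "This content can't be processed.")
    } catch LanguageModelSession.GenerationError.decodingFailure {
        return .failed(message: "The result couldn't be read. Please try again.")
    } catch {
        // Any other failure, including the model being unavailable.
        return .failed(message: "Something went wrong. Please try again.")
    }
}
```

Returning a value instead of throwing keeps the call site simple: the UI switches over TaskExtraction and never has to know about framework error types.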

What About Responsible AI, Safety, and Bias?

Apple’s Foundation Models come with strong built-in guardrails:

- Training data excludes personal and sensitive content

- Outputs pass through filters for safety, bias, and appropriateness

- You can add extra constraints using the Swift macros or session prompts

- Apple regularly updates the models with feedback-driven and region-specific safety data

For apps in regulated industries, read Apple’s developer docs for detailed guidelines and compliance notes.

If you have more specific questions as you work through this tutorial or build your own Foundation Models examples, always check the Apple Developer Documentation, WWDC session videos, and developer forums for the latest real-world tips. Keeping these FAQs handy will help you build smarter, safer, and more user-friendly on-device AI features in Swift right from the start.

Conclusion

Apple Foundation Models give you clear benefits for building modern, intelligent iOS apps. By choosing on-device AI, you gain unmatched speed, keep user data private, and reduce reliance on remote servers. Simple Swift integration cuts development time, while safety guardrails and type-safe outputs help you avoid surprises.

These models support real features like summarization, image understanding, tool-calling, and hands-free automation right inside your apps. Apple’s focus on privacy and efficiency means trust and performance are part of every release, keeping your app strong as technology moves ahead.

Start using the Foundation Models framework today to make your apps smarter and more secure. Review Apple’s Foundation Models API documentation and explore the framework examples for inspiration. By building on the iOS 26 on-device LLM, you’ll stand out and help shape the next wave of user-friendly, privacy-first apps.

Thanks for reading. If you have ideas or want to see more, share your thoughts and help grow the community building on-device AI with Swift and Foundation Models.